One of the most useful, but often misunderstood and misconfigured, features of NGINX is rate limiting. It allows you to limit the number of HTTP requests a user can make in a given period of time. A request can be as simple as a GET request for the homepage of a website or a POST request on a log‑in form.


Rate limiting can be used for security purposes, for example to slow down brute‑force password‑guessing attacks. It can help protect against DDoS attacks by limiting the incoming request rate to a value typical for real users, and (with logging) identify the targeted URLs. More generally, it is used to protect upstream application servers from being overwhelmed by too many user requests at the same time.


In this post we cover the basics of rate limiting with NGINX as well as more advanced configurations. Rate limiting works the same way in NGINX Plus.


To learn more about rate limiting with NGINX, watch our on-demand webinar.


NGINX Plus R16 and later support “global rate limiting”: the NGINX Plus instances in a cluster apply a consistent rate limit to incoming requests regardless of which instance in the cluster the request arrives at. (State sharing in a cluster is available for other NGINX Plus features as well.) For details, see our blog and the NGINX Plus Admin Guide.


How NGINX Rate Limiting Works

NGINX rate limiting uses the leaky bucket algorithm, which is widely used in telecommunications and packet‑switched computer networks to deal with burstiness when bandwidth is limited. The analogy is with a bucket where water is poured in at the top and leaks from the bottom; if the rate at which water is poured in exceeds the rate at which it leaks, the bucket overflows. In terms of request processing, the water represents requests from clients, and the bucket represents a queue where requests wait to be processed according to a first‑in‑first‑out (FIFO) scheduling algorithm. The leaking water represents requests exiting the buffer for processing by the server, and the overflow represents requests that are discarded and never serviced.

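The following minimal Python sketch makes the analogy concrete. It treats a leaky bucket as a FIFO queue:

- requests that arrive while the bucket has room wait their turn;
- one request leaks out every fixed interval;
- arrivals that find the bucket full overflow and are dropped.

This is an illustrative model with hypothetical names (`leaky_bucket`, `arrivals_ms`, `capacity`, `interval_ms`), not NGINX's source code. It assumes a single client and millisecond timestamps in ascending order. It also assumes that a request with nothing ahead of it is forwarded at once without occupying the bucket.

```python
from collections import deque


def leaky_bucket(arrivals_ms, capacity, interval_ms):
    """Simulate a leaky bucket that buffers requests in a FIFO queue.

    arrivals_ms -- request arrival times in ms, in ascending order
    capacity    -- how many requests may wait in the bucket at once
    interval_ms -- one request leaks out of the bucket every interval_ms
    Returns (served, dropped): served is a list of (index, time_forwarded),
    dropped lists the indices of requests that overflowed the bucket.
    """
    waiting = deque()   # forwarding times of requests still in the bucket
    last_out = None     # time the most recently accepted request left
    served, dropped = [], []
    for i, t in enumerate(arrivals_ms):
        # Requests whose forwarding time has come have leaked out by now.
        while waiting and waiting[0] <= t:
            waiting.popleft()
        # FIFO: leave no earlier than the previous request plus one interval.
        out = t if last_out is None else max(t, last_out + interval_ms)
        if out > t and len(waiting) >= capacity:
            dropped.append(i)  # it would have to wait, but the bucket is full
            continue
        last_out = out
        if out > t:
            waiting.append(out)  # this request waits in the bucket
        served.append((i, out))
    return served, dropped


if __name__ == "__main__":
    # 22 requests arrive at the same instant; the bucket holds 20.
    served, dropped = leaky_bucket([0] * 22, capacity=20, interval_ms=100)
    print(served[:3], served[-1], dropped)
    # [(0, 0), (1, 100), (2, 200)] (20, 2000) [21]
```

The first request is forwarded immediately, and the next 20 leave the bucket at 100 ms intervals. The 22nd request finds the bucket full and overflows.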

Configuring Basic Rate Limiting

Rate limiting is configured with two main directives, limit_req_zone and limit_req, as in this example:


```nginx
# Typically placed in the http {} block
limit_req_zone $binary_remote_addr zone=mylimit:10m rate=10r/s;

server {
    location /login/ {
        # Apply the "mylimit" zone to every request for /login/
        limit_req zone=mylimit;

        proxy_pass http://my_upstream;
    }
}
```

The limit_req_zone directive defines the parameters for rate limiting, while limit_req enables rate limiting within the context where it appears (in the example, for all requests to /login/).


The limit_req_zone directive is typically defined in the http block, making it available for use in multiple contexts. It takes the following three parameters:


- Key – Defines the request characteristic against which the limit is applied. In the example it is the NGINX variable $binary_remote_addr, which holds a binary representation of a client's IP address. This means we are limiting each unique IP address to the request rate defined by the third parameter. (We're using this variable because it takes up less space than the string representation of a client IP address, $remote_addr.)

- Zone – Defines the shared memory zone used to store the state of each IP address and how often it has accessed a request‑limited URL. Keeping the information in shared memory means it can be shared among the NGINX worker processes. The definition has two parts: the zone name, identified by the zone= keyword, and the size following the colon. State information for about 16,000 IP addresses takes 1 megabyte, so our 10‑megabyte zone can store about 160,000 addresses.

  If storage is exhausted when NGINX needs to add a new entry, it removes the oldest entry. If the space freed is still not enough to accommodate the new record, NGINX returns status code 503 (Service Temporarily Unavailable). Additionally, to prevent memory from being exhausted, every time NGINX creates a new entry it removes up to two entries that have not been used in the previous 60 seconds.


- Rate – Sets the maximum request rate. In the example, the rate cannot exceed 10 requests per second. NGINX actually tracks requests at millisecond granularity, so this limit corresponds to 1 request every 100 milliseconds (ms). Because we are not allowing for bursts (see the next section), a request is rejected if it arrives less than 100ms after the previous permitted one.

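The arithmetic behind these three parameters is easy to check. The Python sketch below does three things:

- uses the standard ipaddress module to compare the size of a binary address (what $binary_remote_addr holds) with its text form (what $remote_addr holds);
- estimates zone capacity from the figure of about 16,000 addresses per megabyte quoted above;
- converts a rate into the minimum spacing between permitted requests.

The helper names are hypothetical. The per‑megabyte figure is an approximation whose real value depends on the size of a state entry on your platform, so treat the capacity as a rough estimate.

```python
import ipaddress

ENTRIES_PER_MB = 16_000  # approximate figure quoted in the text


def key_sizes(addr):
    """Bytes used by the binary form vs. the text form of an IP address."""
    binary = ipaddress.ip_address(addr).packed  # 4 bytes (IPv4) or 16 (IPv6)
    return len(binary), len(addr)


def zone_capacity(size):
    """Rough number of keys a zone sized like '10m' or '512k' can track."""
    units = {"k": 1 / 1024, "m": 1}  # expressed in megabytes
    value, unit = int(size[:-1]), size[-1].lower()
    return int(value * units[unit] * ENTRIES_PER_MB)


def min_spacing_ms(rate):
    """Minimum gap between permitted requests for a rate like '10r/s'."""
    count, per = rate.split("r/")
    seconds = {"s": 1, "m": 60}[per]  # requests per second or per minute
    return seconds * 1000 / int(count)


print(key_sizes("203.0.113.7"))  # (4, 11)
print(zone_capacity("10m"))      # 160000
print(min_spacing_ms("10r/s"))   # 100.0
print(min_spacing_ms("30r/m"))   # 2000.0
```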

The limit_req_zone directive sets the parameters for rate limiting and the shared memory zone, but it does not actually limit the request rate. For that you need to apply the limit to a specific location or server block by including a limit_req directive there. In the example, we are rate limiting requests to /login/.


So now each unique IP address is limited to 10 requests per second for /login/ – or more precisely, cannot make a request for that URL within 100ms of its previous one.

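Without a burst, the rule in the previous paragraph reduces to a single comparison against the time of the last permitted request. Below is a minimal Python sketch. It assumes one client and millisecond timestamps in ascending order. The function name is hypothetical, and this is a simplification, not NGINX's implementation.

```python
def permitted(arrivals_ms, interval_ms=100):
    """Return True/False per request for a rate limit with no burst.

    A request is permitted only if at least interval_ms have passed since the
    previous *permitted* request; rejected requests do not reset the clock.
    """
    last_ok = None
    results = []
    for t in arrivals_ms:
        ok = last_ok is None or t - last_ok >= interval_ms
        if ok:
            last_ok = t
        results.append(ok)
    return results


print(permitted([0, 50, 100, 120, 250]))
# [True, False, True, False, True]
```

With rate=10r/s, the requests at 50 ms and 120 ms would both be rejected with status 503.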

Handling Bursts

What if we get 2 requests within 100ms of each other? For the second request NGINX returns status code 503 to the client. This is probably not what we want, because applications tend to be bursty in nature. Instead we want to buffer any excess requests and service them in a timely manner. This is where we use the burst parameter to limit_req, as in this updated configuration:

```nginx
location /login/ {
    # Queue up to 20 requests that exceed the zone's rate
    limit_req zone=mylimit burst=20;

    proxy_pass http://my_upstream;
}
```

The burst parameter defines how many requests a client can make in excess of the rate specified by the zone (with our sample mylimit zone, the rate limit is 10 requests per second, or 1 every 100ms). A request that arrives sooner than 100ms after the previous one is put in a queue, and here we are setting the queue size to 20.

That means if 21 requests arrive from a given IP address simultaneously, NGINX forwards the first one to the upstream server group immediately and puts the remaining 20 in the queue. It then forwards a queued request every 100ms, and returns 503 to the client only if an incoming request makes the number of queued requests go over 20.

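The queueing behavior can be reproduced with a single per‑client counter of how many requests are "in excess" of the rate. The sketch below is modeled loosely on the excess accounting that the open source ngx_http_limit_req_module appears to use: an excess value in thousandths of a request that drains at the configured rate. Treat that correspondence as an assumption.

It is a simplified illustration:

- the function name is hypothetical;
- there is one client and no shared memory;
- timestamps are integer milliseconds in ascending order.

For each request it returns how long the request is held before being forwarded, or None for a request rejected with 503.

```python
def limit_req_burst(arrivals_ms, rate_rps, burst):
    """Per-request forwarding delay in ms, or None if rejected (503).

    excess counts queued requests in thousandths of a request; it grows by
    1000 per accepted request and drains by rate_rps per elapsed millisecond.
    """
    excess = None  # no state yet for this client
    last = 0       # arrival time of the last accepted request
    delays = []
    for t in arrivals_ms:
        if excess is None:
            new_excess = 0  # the first request is within the rate
        else:
            new_excess = max(0, excess - rate_rps * (t - last) + 1000)
        if new_excess > burst * 1000:
            delays.append(None)  # the queue would exceed burst: reject
            continue
        excess, last = new_excess, t
        # Hold the request until the queued excess drains at the rate.
        delays.append(new_excess // rate_rps)
    return delays


delays = limit_req_burst([0] * 22, rate_rps=10, burst=20)
print(delays[:3], delays[20], delays[21])  # [0, 100, 200] 2000 None
```

The 21 simultaneous requests are forwarded at 100 ms intervals and the 22nd is rejected, which agrees with the walk‑through above. Each delay is measured from the request's arrival.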

Queueing with No Delay

A configuration with burst results in a smooth flow of traffic, but is not very practical because it can make your site appear slow. In our example, the 20th request in the queue waits 2 seconds to be forwarded, at which point a response to it might no longer be useful to the client. To address this situation, add the nodelay parameter along with the burst parameter:

```nginx
location /login/ {
    # Forward queued requests immediately, but still enforce the rate
    limit_req zone=mylimit burst=20 nodelay;

    proxy_pass http://my_upstream;
}
```

With the nodelay parameter, NGINX still allocates slots in the queue according to the burst parameter and imposes the configured rate limit, but not by spacing out the forwarding of queued requests. Instead, when a request arrives "too soon", NGINX forwards it immediately as long as there is a slot available for it in the queue. It marks that slot as "taken" and does not free it for use by another request until the appropriate time has passed (in our example, after 100ms).

Suppose, as before, that the 20‑slot queue is empty and 21 requests arrive simultaneously from a given IP address. NGINX forwards all 21 requests immediately and marks the 20 slots in the queue as taken, then frees 1 slot every 100ms. (If there were 25 requests instead, NGINX would immediately forward 21 of them, mark 20 slots as taken, and reject 4 requests with status 503.)

Now suppose that 101ms after the first set of requests was forwarded, another 20 requests arrive simultaneously. Only 1 slot in the queue has been freed, so NGINX forwards 1 request and rejects the other 19 with status 503. If instead 501ms have passed before the 20 new requests arrive, 5 slots are free, so NGINX forwards 5 requests immediately and rejects 15.

The effect is equivalent to a rate limit of 10 requests per second. The nodelay option is useful if you want to impose a rate limit without constraining the allowed spacing between requests.

Note: For most deployments, we recommend including the burst and nodelay parameters in the limit_req directive.

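The slot bookkeeping in the nodelay walk‑through can be checked with a per‑client counter. The difference from plain burst is that accepted requests are forwarded at once instead of being held.

This is a minimal sketch under the following assumptions:

- one client and integer millisecond timestamps;
- a hypothetical NodelayLimiter class and wave helper, loosely modeled on the excess accounting of the open source module rather than copied from NGINX's code;
- slots freed at the configured rate, which at 10r/s is 1 slot every 100 ms.

```python
class NodelayLimiter:
    """Tracks how many burst slots are taken, in thousandths of a slot."""

    def __init__(self, rate_rps, burst):
        self.rate_rps = rate_rps
        self.burst = burst
        self.taken = None  # no state until the first request
        self.last = 0      # time of the last forwarded request

    def allow(self, t_ms):
        """Return True to forward immediately, False to reject with 503."""
        if self.taken is None:
            taken = 0  # a request within the rate takes no slot
        else:
            # Slots free up at the configured rate (1 per 100 ms at 10r/s).
            freed = self.rate_rps * (t_ms - self.last)
            taken = max(0, self.taken - freed + 1000)
        if taken > self.burst * 1000:
            return False
        self.taken, self.last = taken, t_ms
        return True


def wave(limiter, t_ms, n):
    """Send n simultaneous requests; return (forwarded, rejected)."""
    ok = sum(limiter.allow(t_ms) for _ in range(n))
    return ok, n - ok


lim = NodelayLimiter(rate_rps=10, burst=20)
print(wave(lim, 0, 21), wave(lim, 101, 20))  # (21, 0) (1, 19)

lim = NodelayLimiter(rate_rps=10, burst=20)
print(wave(lim, 0, 21), wave(lim, 501, 20))  # (21, 0) (5, 15)
```

A fresh limiter receiving 25 simultaneous requests forwards 21 and rejects 4, matching the parenthetical case in the text.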

Two-Stage Rate Limiting

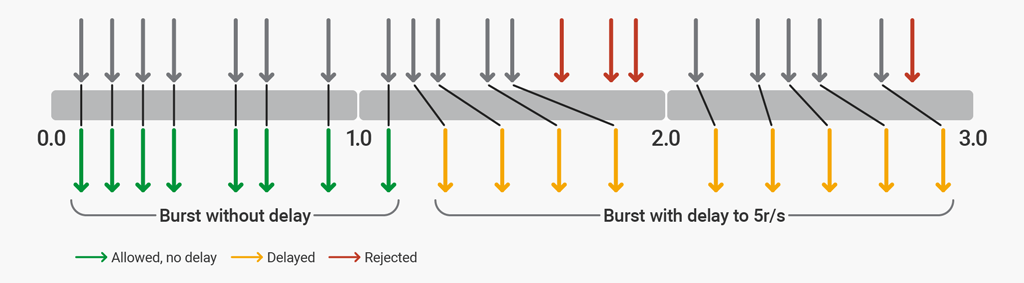

With NGINX Plus R17 or NGINX Open Source 1.15.7 you can configure NGINX to allow a burst of requests to accommodate the typical web browser request pattern, and then throttle additional excessive requests up to a point, beyond which additional excessive requests are rejected. Two-stage rate limiting is enabled with the delay parameter to the limit_req directive.

To illustrate two‑stage rate limiting, here we configure NGINX to protect a website by imposing a rate limit of 5 requests per second (r/s). The website typically has 4–6 resources per page, and never more than 12 resources. The configuration allows bursts of up to 12 requests, the first 8 of which are processed without delay. A delay is added after 8 excessive requests to enforce the 5 r/s limit. After 12 excessive requests, any further requests are rejected.


```nginx
limit_req_zone $binary_remote_addr zone=ip:10m rate=5r/s;

server {
    listen 80;

    location / {
        # Bursts of up to 12 excessive requests; the first 8 are not delayed
        limit_req zone=ip burst=12 delay=8;

        proxy_pass http://website;
    }
}
```

The delay parameter defines the point at which, within the burst size, excessive requests are throttled (delayed) to comply with the defined rate limit. With this configuration in place, a client that makes a continuous stream of requests at 8 r/s experiences the following behavior.


Figure: rate=5r/s burst=12 delay=8

The first 8 requests (the value of delay) are proxied by NGINX Plus without delay. The next 4 requests (burst – delay) are delayed so that the defined rate of 5 r/s is not exceeded. The next 3 requests are rejected because the total burst size has been exceeded. Subsequent requests are delayed.

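The three phases can be expressed as a small classification of excessive requests. Below is a minimal Python sketch. It assumes excessive requests are numbered from 1 and arrive together. It also assumes that each request past the delay threshold is held one rate interval longer than the previous one (200 ms at 5 r/s). The function name is hypothetical, and this follows the description above rather than NGINX's code.

```python
def two_stage(n_excess, rate_rps, burst, delay):
    """Classify excessive requests 1..n_excess following the description.

    Returns a list of ("immediate", 0), ("delayed", wait_ms) or ("rejected", None).
    """
    interval_ms = 1000 // rate_rps  # 200 ms at 5 r/s
    outcome = []
    for k in range(1, n_excess + 1):
        if k <= delay:
            outcome.append(("immediate", 0))      # up to delay: no wait
        elif k <= burst:
            wait = (k - delay) * interval_ms      # throttled to the rate
            outcome.append(("delayed", wait))
        else:
            outcome.append(("rejected", None))    # beyond the burst: 503
    return outcome


result = two_stage(15, rate_rps=5, burst=12, delay=8)
counts = {}
for phase, _ in result:
    counts[phase] = counts.get(phase, 0) + 1
print(counts)  # {'immediate': 8, 'delayed': 4, 'rejected': 3}
print([w for p, w in result if p == "delayed"])  # [200, 400, 600, 800]
```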

Reference: https://www.nginx.com/blog/rate-limiting-nginx/